I have verified that the OpsGenie plugin opsgenie_issues.py works correctly. I created a new site with only two hosts and the bare minimum of configuration for OpsGenie notifications. This basic environment is able to create, acknowledge and close alerts in OpsGenie.

In my production site, cmk_notify is only able to create and acknowledge alerts in OpsGenie. For the “Close” event only, CMK is not passing SVC_PROBLEM_ID to the opsgenie_issues.py plugin.

How do I troubleshoot this issue?

I have turned on Global Settings > Logging of the notification mechanics.

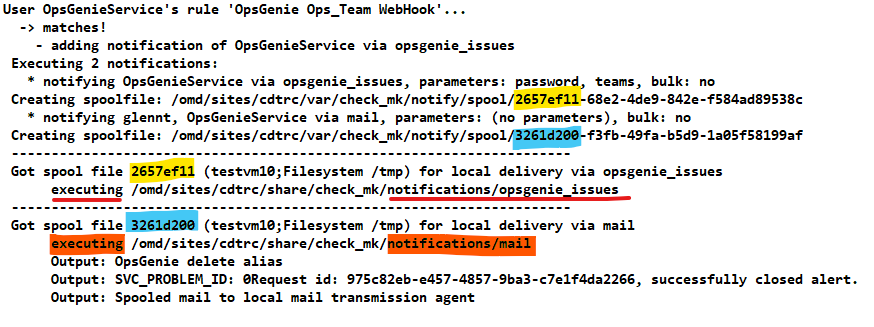

The following is a successful acknowledge update to OpsGenie from ~/var/log/notify.log:

2020-09-18 10:47:31 Got spool file 133200ce (testvm10;Filesystem /tmp) for local delivery via opsgenie_issues

2020-09-18 10:47:31 executing /omd/sites/cdtrc/share/check_mk/notifications/opsgenie_issues

2020-09-18 10:47:31 Output: OpsGenie acknowledge alias

2020-09-18 10:47:31 Output: SVC_PROBLEM_ID: 92385Request id: c129d63d-a0ea-4be3-8c81-79500fb74060, successfully added acknowledgedment.

2020-09-18 10:47:31 Output: Spooled mail to local mail transmission agent

The following is a failed close update to OpsGenie from ~/var/log/notify.log:

2020-09-18 10:49:00 Got spool file 3261d200 (testvm10;Filesystem /tmp) for local delivery via mail

2020-09-18 10:49:00 executing /omd/sites/cdtrc/share/check_mk/notifications/mail

2020-09-18 10:49:00 Output: OpsGenie delete alias

2020-09-18 10:49:00 Output: SVC_PROBLEM_ID: 0Request id: 975c82eb-e457-4857-9ba3-c7e1f4da2266, successfully closed alert.

2020-09-18 10:49:00 Output: Spooled mail to local mail transmission agent

There is no indication of why SVC_PROBLEM_ID is 0.

I found that Check_MK is mixing up which spool file and/or which notification method to execute: the close is logged as local delivery via mail, yet the output comes from the OpsGenie plugin. This ONLY happens on the close (CMK status = OK).
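To check whether other close notifications are affected the same way, a small scanner over ~/var/log/notify.log can flag every delivery that reported a problem ID of 0 or that ran a method other than opsgenie_issues. This is a minimal sketch that assumes the log line format quoted above; the function name `suspicious_deliveries` and the regular expressions are hypothetical, not part of Checkmk.

```python
import re
from typing import Iterable, List, Optional

# Patterns assumed from the notify.log lines quoted in this thread.
SPOOL_RE = re.compile(r"Got spool file (\S+) \((.+?)\) for local delivery via (\S+)")
ID_RE = re.compile(r"Output: (HOST|SVC)_PROBLEM_ID: (\d+)")


def suspicious_deliveries(lines: Iterable[str]) -> List[dict]:
    """Return deliveries that reported problem ID 0 or did not run opsgenie_issues."""
    findings = []
    current: Optional[dict] = None
    for line in lines:
        spool = SPOOL_RE.search(line)
        if spool:
            # Start tracking a new spool file delivery.
            current = {
                "spool": spool.group(1),
                "object": spool.group(2),
                "method": spool.group(3),
                "problem_id": None,
            }
            continue
        found = ID_RE.search(line)
        if found and current is not None:
            # The digits stop at the first non-digit, e.g. "0Request id: ...".
            current["problem_id"] = int(found.group(2))
            if current["problem_id"] == 0 or current["method"] != "opsgenie_issues":
                findings.append(current)
            current = None
    return findings
```

To run it against a site's log, open the file (for example with `open(os.path.expanduser("~/var/log/notify.log"))`) and pass the file object to `suspicious_deliveries`; each result names the spool file, the host;service pair and the method that was actually executed.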

How can I get this fixed?

We are seeing the same issue here: our OpsGenie alerts open just fine but aren’t closing. I haven’t turned on global logging yet but will do so if it helps debug this, because it’s costing me a lot of extra effort to go in and close alerts manually. Today, within one hour, around 50 alerts were generated; although probably not all of them should have been, I now have OpsGenie alerts and Jira tickets to deal with for every one of them.

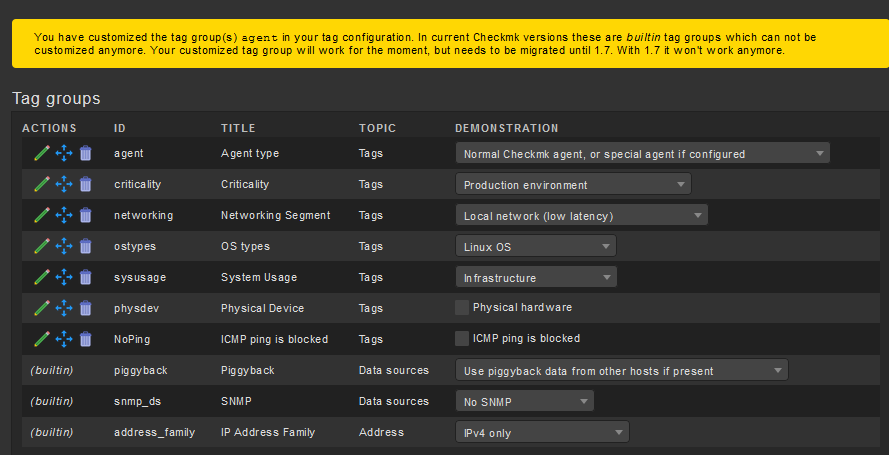

Do you also have any warnings in WATO > tags about “You have customized the tag group …”?

I am just trying to determine if this is related.

No, we don’t have the customized tags warning, so it doesn’t appear to be connected to that.

Now that I’ve started digging into the log files, I’m not even seeing my installation try to send an “OK” to close the alert.

To increase notification logging, I changed:

Global Settings > Monitoring Core > Logging of the notification mechanics = Enable notification logging

To also log the Nagios internal variables being used:

Global Settings > Notifications > Notification log level = Full dump of all variables and command

The log to follow is ~/var/log/notify.log.

To view what is being received in OpsGenie:

Settings > Logs

Look for “INFO INTEGRATIONLOG” entries; the “Raw” view has the most data.

I have made an interesting observation. When the alert is for a host being down and the host comes back up, check_mk properly closes the alert. However, it still doesn’t close alerts for critical issues that have been fixed.

I encountered the same issue and solved it, and was looking for a way to share my findings with the community. The problem is that Check_MK’s notify command is missing two extra parameters.

It is missing NOTIFY_LASTHOSTPROBLEMID and NOTIFY_LASTSERVICEPROBLEMID. According to the original Nagios macro list ( https://assets.nagios.com/downloads/nagioscore/docs/nagioscore/3/en/macrolist.html ), when a host or service returns to UP or OK, Nagios sets HOSTPROBLEMID/SERVICEPROBLEMID to ‘0’ and places the old ID in LASTHOSTPROBLEMID/LASTSERVICEPROBLEMID.
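The problem ID lifecycle described above can be modelled in a few lines to show why the close notification sees 0. This is a simplified sketch of the documented macro behaviour, not Nagios source code: the `ProblemTracker` class is hypothetical, and it deliberately does not model what happens to the last-problem value when a new problem starts.

```python
from dataclasses import dataclass
from itertools import count

# Hypothetical global counter standing in for Nagios' unique problem numbers.
_next_problem_id = count(1)


@dataclass
class ProblemTracker:
    current_problem_id: int = 0
    last_problem_id: int = 0

    def enter_problem(self) -> None:
        # A new problem gets a fresh number; staying in a problem state keeps it.
        if self.current_problem_id == 0:
            self.current_problem_id = next(_next_problem_id)

    def recover(self) -> None:
        # On UP/OK: the old ID moves to the "last" slot and the current one drops to 0.
        self.last_problem_id = self.current_problem_id
        self.current_problem_id = 0

    def macros(self) -> dict:
        # Values a service notification would receive for these two macros.
        return {
            "SERVICEPROBLEMID": str(self.current_problem_id),
            "LASTSERVICEPROBLEMID": str(self.last_problem_id),
        }
```

After `enter_problem()` followed by `recover()`, `macros()["SERVICEPROBLEMID"]` is `"0"`, which is exactly the value the OpsGenie alias ends up with on close unless the plugin also reads the last-problem value.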

So to fix this you have to change two things. First, the starting point for the notification, check_mk_templates.cfg:

```
# diff check_mk_templates.cfg.new check_mk_templates.cfg
359,360d358
< NOTIFY_LASTHOSTPROBLEMID='$LASTHOSTPROBLEMID$' \
< NOTIFY_LASTSERVICEPROBLEMID='$LASTSERVICEPROBLEMID$' \
```
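The template change matters because the plugin reads these values from its context with the NOTIFY_ prefix stripped (the plugin fix relies on this when it reads `context['LASTSERVICEPROBLEMID']`). If NOTIFY_LASTSERVICEPROBLEMID is never exported, the context simply has no LASTSERVICEPROBLEMID key. A minimal sketch of that mapping, assuming this prefix-stripping convention; `context_from_env` is a hypothetical helper, not Checkmk’s own function, and the IDs in the usage are illustrative.

```python
import os
from typing import Dict, Mapping, Optional

PREFIX = "NOTIFY_"


def context_from_env(environ: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Collect NOTIFY_* variables with the prefix stripped (assumed convention)."""
    env = os.environ if environ is None else environ
    # Only NOTIFY_* variables reach the context; everything else is ignored.
    return {key[len(PREFIX):]: value for key, value in env.items() if key.startswith(PREFIX)}
```

Without the two template lines, the environment only carries NOTIFY_SERVICEPROBLEMID, so on recovery the context holds `'0'` and nothing to fall back on.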

Then you have to modify opsgenie_issues.py. For hosts:

```python
alias = 'HOST_PROBLEM_ID: %s' % context['HOSTPROBLEMID']
if alias == 'HOST_PROBLEM_ID: 0':
    # On recovery Nagios resets HOSTPROBLEMID to 0; use the previous ID instead
    alias = 'HOST_PROBLEM_ID: %s' % context['LASTHOSTPROBLEMID']
```

and for services:

```python
alias = 'SVC_PROBLEM_ID: %s' % context['SERVICEPROBLEMID']
if alias == 'SVC_PROBLEM_ID: 0':
    # Same fallback for services: SERVICEPROBLEMID is 0 once the service is OK again
    alias = 'SVC_PROBLEM_ID: %s' % context['LASTSERVICEPROBLEMID']
```
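Both fallbacks can be folded into one helper that also tolerates a context where the last-problem ID was never exported (for example, before the template change) instead of raising a KeyError. This is a sketch; `problem_alias` and its `what` parameter are hypothetical names, while the alias prefixes and context keys are the ones used in the fix above.

```python
from typing import Mapping


def problem_alias(context: Mapping[str, str], what: str) -> str:
    """Build the OpsGenie alias, falling back to the previous problem ID on recovery.

    `what` is "HOST" or "SERVICE" (hypothetical parameter).
    """
    prefix = {"HOST": "HOST_PROBLEM_ID", "SERVICE": "SVC_PROBLEM_ID"}[what]
    problem_id = context.get(what + "PROBLEMID", "0")
    if problem_id == "0":
        # Recovery: the current ID was reset, so use LAST...PROBLEMID if present.
        problem_id = context.get("LAST" + what + "PROBLEMID", "0")
    return "%s: %s" % (prefix, problem_id)
```

Returning the zero alias keeps the plugin from crashing when the template lines are missing, but OpsGenie still won't find the alert to close, so the check_mk_templates.cfg change remains necessary.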

I also had to modify opsgenie_issues.py to use a proxy…
