Hi,

We are experiencing the same problem in our data centers (DC). I don’t have permission to install xinetd on the servers. The current checkmk configuration is pretty basic.

Did anyone check for SELinux? Is it enabled and enforcing on affected hosts?

SELinux is disabled on every server in the DC, so it is unlikely to be the reason.

Did anyone check the actual agent output during the time of CPU stress (cmk -d $HOSTNAME) ? Maybe one can understand which section causes the load.

I used telnet to connect to port 6556 and the output looked OK, at least at first glance.

The most common state when the agent “crashes”:

$ ps axjf

PPID PID PGID SID TTY TPGID STAT UID TIME COMMAND

1 1377150 1377150 1377150 ? -1 Ss 0 34:05 /bin/bash /usr/bin/check_mk_agent

1377150 1624760 1377150 1377150 ? -1 R 0 34689:11 \_ /bin/bash /usr/bin/check_mk_agent

1624760 1624761 1377150 1377150 ? -1 Z 0 0:00 \_ [systemctl] <defunct>

$ top

top - 11:33:44 up 313 days, 17:42, 1 user, load average: 1.86, 1.46, 1.32

Tasks: 254 total, 3 running, 250 sleeping, 0 stopped, 1 zombie

%Cpu0 : 0.0 us, 0.0 sy, 0.0 ni,100.0 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

%Cpu1 : 0.0 us, 0.0 sy, 0.0 ni,100.0 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

%Cpu2 :100.0 us, 0.0 sy, 0.0 ni, 0.0 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

%Cpu3 : 5.9 us, 5.9 sy, 0.0 ni, 88.2 id, 0.0 wa, 0.0 hi, 0.0 si, 0.0 st

MiB Mem : 7944.5 total, 638.8 free, 3263.1 used, 4042.6 buff/cache

MiB Swap: 4092.0 total, 4071.5 free, 20.5 used. 3945.8 avail Mem

PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

1624760 root 20 0 28480 10148 1272 R 88.2 0.1 34689:08 check_m+

1 root 20 0 255968 14080 9164 S 0.0 0.2 1329:17 systemd

2 root 20 0 0 0 0 S 0.0 0.0 0:36.93 kthreadd

The script “/usr/bin/check_mk_agent” hangs, and its systemctl subprocess becomes a zombie because the parent never collects it. The 100% usage of one CPU core could be caused by some sort of infinite loop (one that executes no commands and never releases the CPU with sleep, …).
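To spot this state on many hosts without reading the ps tree by eye, something like the following could help. It is only a sketch: the function name find_agent_zombies is made up for illustration, and it assumes the agent shows up with the command name check_mk_agent (as in the top output above). It prints the PID, parent PID and name of every zombie whose parent is an agent process.

```shell
#!/bin/bash
# Sketch: list zombie children of check_mk_agent processes.
# Input on stdin: lines of "PID PPID STAT COMMAND" without a header.
# find_agent_zombies is a hypothetical helper name, not part of Checkmk.
find_agent_zombies() {
    awk '
        # Remember which PIDs belong to the agent script.
        $4 ~ /^check_mk_agent/ { agent[$1] = 1 }
        # Remember zombies (STAT starts with Z), their parent and their name.
        $3 ~ /^Z/ { zombie[$1] = $2; name[$1] = $4 }
        END {
            # Report only zombies whose parent is an agent process.
            for (pid in zombie)
                if (zombie[pid] in agent)
                    print pid, zombie[pid], name[pid]
        }
    '
}
```

On a live host it would be fed like this: `ps -eo pid=,ppid=,stat=,comm= | find_agent_zombies`. Empty output means no agent process currently has an uncollected child.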

The loop at the end of the agent script looks OK though:

# if MK_LOOP_INTERVAL is set, we assume we're a 'simple' systemd service

if [ -n "$MK_LOOP_INTERVAL" ]; then

while sleep "$MK_LOOP_INTERVAL"; do

# Note: this will not output anything if MK_RUN_SYNC_PARTS=false, which is the intended case.

# Anyway: rather send it to /dev/null than risk leaking unencrypted output.

MK_LOOP_INTERVAL="" main >/dev/null

done

fi

We attached strace to the process and got no output, which suggests the process is spinning in user space without making any system calls.
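Since strace stays silent, another way to confirm a user-space spin is to compare the user and system CPU counters in /proc/<pid>/stat. The following is a rough sketch, not something we ran in production; proc_cpu_ticks is a made-up helper name. It reads one stat line and prints utime and stime in clock ticks (fields 14 and 15 according to proc(5)).

```shell
#!/bin/bash
# Sketch: print "utime stime" (in clock ticks) from one /proc/<pid>/stat
# line given on stdin. proc_cpu_ticks is a hypothetical helper name.
proc_cpu_ticks() {
    local line rest
    IFS= read -r line || return 1
    # The command name is wrapped in parentheses and may contain spaces,
    # so drop everything up to the last ") " before splitting into fields.
    rest=${line##*) }
    # After that cut, field 1 is the process state, so utime and stime
    # (fields 14 and 15 of the full line) are now fields 12 and 13.
    set -- $rest
    echo "${12} ${13}"
}
```

Usage idea: run `proc_cpu_ticks < /proc/1624760/stat` twice, a second apart. If utime grows by roughly `getconf CLK_TCK` ticks while stime stays flat, the process is burning a full core in user space, which would match the silent strace.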

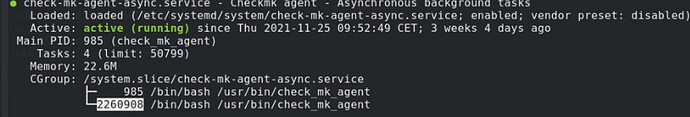

Restarting check-mk-agent-async.service always frees the CPU.

The interval between crashes varies from 7 hours up to 5 or more days.

So far the problem was detected on:

Count ; Release ; Kernel

1 ; CentOS Linux release 8.2.2004 (Core) ;4.18.0-193.14.2.el8_2.x86_64

19 ; CentOS Linux release 8.2.2004 (Core) ;4.18.0-193.19.1.el8_2.x86_64

3 ; CentOS Linux release 8.2.2004 (Core) ;4.18.0-193.28.1.el8_2.x86_64

5 ; CentOS Linux release 8.3.2011 ;4.18.0-240.10.1.el8_3.x86_64

1 ; AlmaLinux release 8.4 (Electric Cheetah) ;4.18.0-240.22.1.el8_3.x86_64

The problem was **NOT** encountered on RHEL 7 or 8.

Updating the agent package from p12 to p16 didn’t help:

check-mk-agent-2.0.0p12-1.eba2fbc587cbf845.noarch

check-mk-agent-2.0.0p16-1.ecc5e7dc7c2635d8.noarch

I created 12 virtual machines for testing and updated separate parts (systemd, kernel, systemd + kernel, full system update). It looked as if the full update on CentOS 8 helps, but that is not a viable solution for the data center.

The most stable solution was to install the agent package and then overwrite the agent script with the one from GitHub under release 2.0 ( https://github.com/tribe29/checkmk/blob/2.0.0/agents/check_mk_agent.linux ):

- Install the check-mk-agent package

check-mk-agent-2.0.0p12-1.eba2fbc587cbf845.noarch or

check-mk-agent-2.0.0p16-1.ecc5e7dc7c2635d8.noarch

- Copy the checkmk agent script from GitHub (release 2.0)

$ wget https://github.com/tribe29/checkmk/raw/2.0.0/agents/check_mk_agent.linux

$ cp -vf check_mk_agent.linux /usr/bin/check_mk_agent

- Reload services

#Reload systemd

$ systemctl daemon-reload

#Checkmk agent - restart socket service

$ systemctl restart check-mk-agent.socket

#Checkmk agent - restart Asynchronous background tasks

$ systemctl restart check-mk-agent-async.service
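For rolling this out to more than a handful of hosts, the copy and restart steps could be wrapped roughly like this. It is a sketch only: run and replace_agent are made-up names, and the backup copy with the .orig suffix is my own addition, not part of the procedure above. With DRY_RUN=1 it only prints what it would do.

```shell
#!/bin/bash
# Sketch of the copy and restart steps. One addition that is NOT in the
# original procedure: a backup of the packaged agent (".orig" suffix is an
# assumption). run and replace_agent are hypothetical helper names.

# Execute a command, or only print it when DRY_RUN=1 is set.
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# $1: downloaded check_mk_agent.linux
# $2: installed agent script (defaults to /usr/bin/check_mk_agent)
replace_agent() {
    local src=$1
    local dst=${2:-/usr/bin/check_mk_agent}

    # Back up, overwrite, then reload systemd and restart both agent units.
    # The chain stops at the first step that fails.
    run cp -a "$dst" "$dst.orig" &&
    run cp -vf "$src" "$dst" &&
    run systemctl daemon-reload &&
    run systemctl restart check-mk-agent.socket &&
    run systemctl restart check-mk-agent-async.service
}
```

Example: `DRY_RUN=1 replace_agent check_mk_agent.linux` shows the five commands; dropping DRY_RUN (and running as root) executes them.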

The only other file used was /etc/check_mk/encryption.cfg, to enable encryption.

Since applying this procedure, no crashes have been observed so far.

I tried the same with the script from the master branch (GitHub), but discovery reported that the df command has problems. It is a dev branch, so I’ll try again later.

I hope any of this information helps.