CMK version: 2.0.0p19 (CRE)

OS version: CentOS 8

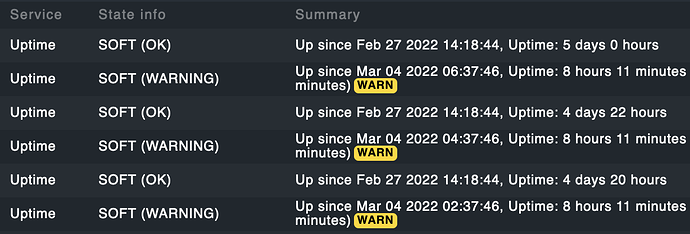

Error: After a service discovery has run, the next check seems to use old cached data. In this example, the uptime of a Cisco switch flaps between 8h 11min and the actual uptime.

This can happen with any check on any of our devices. It causes flapping alerts and makes the graphs unusable.

Output of “cmk --debug -vvn hostname”:

The debug command results in a Python exception:

```
  File "/omd/sites/noc2/bin/cmk", line 79, in <module>
    errors = config.load_all_agent_based_plugins(check_api.get_check_api_context)
  File "/omd/sites/noc2/lib/python3/cmk/base/config.py", line 1428, in load_all_agent_based_plugins
    errors.extend(load_checks(get_check_api_context, filelist))
  File "/omd/sites/noc2/lib/python3/cmk/base/config.py", line 1576, in load_checks
    errors = (_extract_agent_and_snmp_sections(validate_creation_kwargs=did_compile) +
  File "/omd/sites/noc2/lib/python3/cmk/base/config.py", line 2018, in _extract_agent_and_snmp_sections
    raise MKGeneralException(exc) from exc
cmk.utils.exceptions.MKGeneralException
```