check_mk.socket: Too many incoming connections (4) from source x.x.x.x, dropping connection

Keep getting this error sporadically, hilariously.

Monitoring from a remote LTE/ADSL connection very hard to overwhelm the remote servers 1gb+ connection.

Yes I can edit the /etc/systemd/system/check_mk.socket but how come / what causes these sockets to not be released in a timely manner.

Restarting the systemctl restart check_mk.socket service doesn’t seem to fix it either.

Another 24 hours later and a bunch of hosts show check_mk inventory / service offline due to this in their agent log.

I have my checks set to 3 minutes, which I thought would be far enough to prevent this sort of issue as well.

May 01 11:06:13 ns2 systemd[1]: check_mk.socket: Too many incoming connections (31) from source 192.168.192.3, dropping connection.

May 01 11:08:59 ns2 systemd[1]: check_mk.socket: Too many incoming connections (31) from source 192.168.192.3, dropping connection.

May 01 11:09:13 ns2 systemd[1]: check_mk.socket: Too many incoming connections (31) from source 192.168.192.3, dropping connection.

May 01 11:10:43 ns2 systemd[1]: check_mk.socket: Too many incoming connections (31) from source 192.168.192.3, dropping connection.

May 01 11:11:59 ns2 systemd[1]: check_mk.socket: Too many incoming connections (31) from source 192.168.192.3, dropping connection.

May 01 11:12:13 ns2 systemd[1]: check_mk.socket: Too many incoming connections (31) from source 192.168.192.3, dropping connection.

May 01 11:14:59 ns2 systemd[1]: check_mk.socket: Too many incoming connections (31) from source 192.168.192.3, dropping connection.

May 01 11:15:13 ns2 systemd[1]: check_mk.socket: Too many incoming connections (31) from source 192.168.192.3, dropping connection.

May 01 11:15:45 ns2 systemd[1]: check_mk.socket: Too many incoming connections (31) from source 192.168.192.3, dropping connection.

May 01 11:17:59 ns2 systemd[1]: check_mk.socket: Too many incoming connections (31) from source 192.168.192.3, dropping connection.

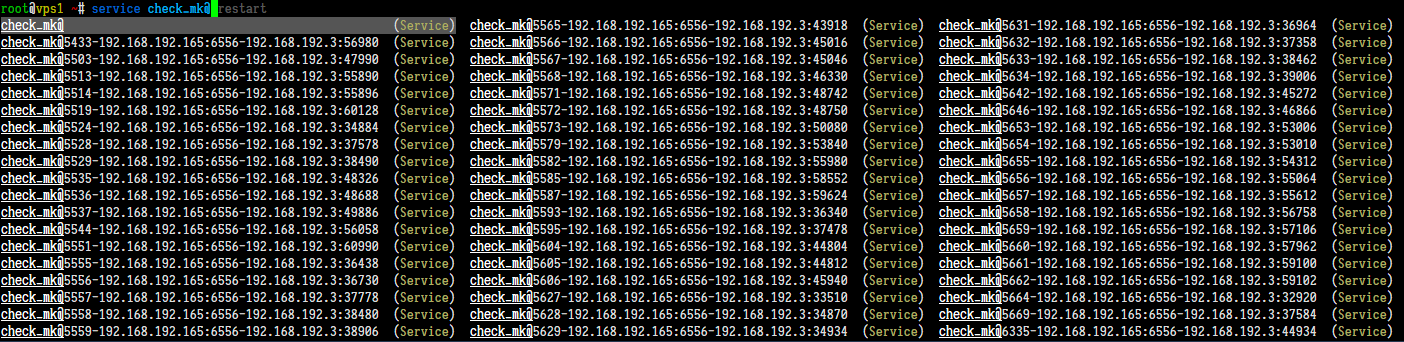

The only thing that seems to work once a host has gone like this is

systemctl restart check_mk.socket;systemctl daemon-reload;systemctl status check_mk.socket

Its super frustrating as reliable monitoring alerts is the only reason to even monitor something. Getting blasted by crits that are caused by the monitoring system makes me sad.