We can create a monitor/rule for a single Kubernetes cluster by following the guide here: https://checkmk.com/cms_monitoring_kubernetes.html. We use the Raw Edition of Checkmk, version 1.6.0p16.

Problem: We cannot securely (via HTTPS) monitor more than one Kubernetes cluster on a single Checkmk instance.

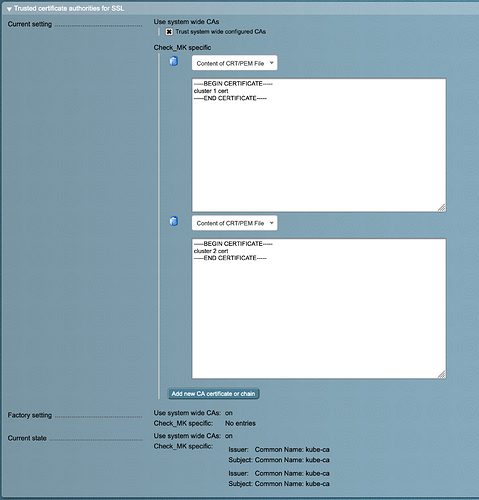

We have two k8s clusters, cluster-1 and cluster-2. We created the check-mk ServiceAccount and namespace in both clusters, and we obtained the certificate and token from the secret in each cluster. Following the documentation linked above, we can configure a single cluster to be monitored by Checkmk, but as soon as we configure the next cluster, it fails with SSL errors. It's almost as if Checkmk is using only the first cert it finds under Global Settings > Site Management. We have two certs defined. (I had a screenshot for this, but I got an error saying new users can only upload a single media item per post.)
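For anyone trying to reproduce this, here is a minimal sketch of pulling the certificate and token out of a ServiceAccount secret. It assumes the secret was exported as JSON (for example with `kubectl get secret <secret-name> -n check-mk -o json`, where `<secret-name>` is a placeholder) and that it carries the usual base64-encoded `ca.crt` and `token` fields. The function name is only illustrative.

```python
import base64
import json


def decode_service_account_secret(secret_json: str) -> dict:
    """Decode the CA certificate (PEM) and bearer token from a Secret's JSON.

    Assumes the standard ServiceAccount token secret layout, where
    data["ca.crt"] and data["token"] are base64-encoded strings.
    """
    data = json.loads(secret_json)["data"]
    return {
        "ca_crt": base64.b64decode(data["ca.crt"]).decode("utf-8"),
        "token": base64.b64decode(data["token"]).decode("utf-8"),
    }


# Hypothetical usage, with one exported file per cluster:
#   with open("cluster-1-secret.json") as handle:
#       creds = decode_service_account_secret(handle.read())
#   print(creds["ca_crt"])
```

Running this once per cluster makes it easy to confirm that the two CA certificates really are different before they are entered into Checkmk.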

Again, if I configure cluster-1 monitoring first, cluster-1 will be monitored. Once I add cluster-2 monitoring, cluster-2 fails due to SSL errors. However, if I reverse the order in which I create the monitors, cluster-2 will be monitored, but cluster-1 will fail due to SSL errors.
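One check that might help narrow this down independently of Checkmk is to call each cluster's API server while trusting only one CA certificate at a time. The sketch below uses only the Python standard library. The API URLs and file paths in the usage comment are hypothetical, and `/version` is assumed to be reachable on the API server with the ServiceAccount token.

```python
import ssl
import urllib.error
import urllib.request


def build_version_request(api_url: str, token: str) -> urllib.request.Request:
    """Build a GET request for the API server's /version endpoint."""
    url = api_url.rstrip("/") + "/version"
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})


def check_cluster(api_url: str, ca_file: str, token: str, timeout: float = 10.0) -> str:
    """Try one cluster while trusting ONLY the CA certificate in ca_file."""
    # Passing cafile means the system trust store is not loaded, so a
    # certificate signed by any other CA fails verification.
    context = ssl.create_default_context(cafile=ca_file)
    request = build_version_request(api_url, token)
    try:
        with urllib.request.urlopen(request, context=context, timeout=timeout) as response:
            return f"OK (HTTP {response.status})"
    except urllib.error.HTTPError as exc:
        # The TLS handshake succeeded; the server rejected the request itself.
        return f"TLS OK, HTTP {exc.code}"
    except OSError as exc:
        # Covers URLError, which wraps certificate verification failures.
        return f"FAILED: {exc}"


# Hypothetical usage: test every cluster against every CA file.
#   clusters = {"cluster-1": "https://cluster-1.example:6443",
#               "cluster-2": "https://cluster-2.example:6443"}
#   for name, url in clusters.items():
#       for ca in ("cluster-1-ca.crt", "cluster-2-ca.crt"):
#           print(name, ca, check_cluster(url, ca, token="..."))
```

If each cluster verifies only against its own CA file, the certificates and tokens themselves are fine, which would point at how the certificate is selected on the Checkmk side.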

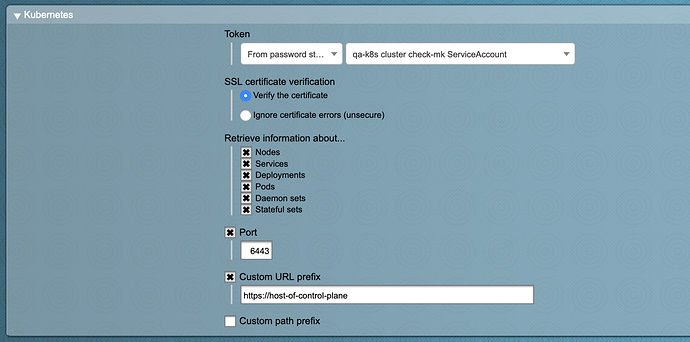

Our theory is that when creating the Kubernetes special agent config, we only specify the token (password) to use, but have no option to specify which cert to use. E.g.:

It is not acceptable for us to use the same SSL certificate across multiple clusters for production. That’s an absolute non-starter.

Any advice or troubleshooting steps that can be provided would be greatly appreciated.