OK, thanks. I moved the file; it is now here:

OMD[SAL]:~/local/share/check_mk/checks$ tree

.

`-- nvidia_smi

OMD[SAL]:~/local/share/check_mk/checks$ pwd

/omd/sites/SAL/local/share/check_mk/checks

The file looks like this:

OMD[SAL]:~/local/share/check_mk/checks$ less nvidia_smi

    #!/usr/bin/env python

    def nvidia_smi_parse(info):
        data = {}
        for i, line in enumerate(info):
            if len(line) != 4:
                continue  # Skip unexpected lines
            pool_name, pm_type, metric, value = line
            item = '%s [%s]' % (pool_name, pm_type)
            if item not in data:
                data[item] = {}
            data[item][metric] = int(value)
        return data

    def inventory_nvidia_smi(info):

...

and

OMD[SAL]:~/local/share/check_mk/checks$ ls -l nvidia_smi

-rwxr-xr-x 1 SAL SAL 4015 Sep 12 11:46 nvidia_smi*
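One thing worth noting about the parse function above: it silently skips every line that does not have exactly four fields, so if the section lines have a different shape, the result is an empty dict and there is nothing to discover. A minimal sketch to illustrate this, run outside of Checkmk. The sample rows are made up for illustration and are not real agent output:

```python
# Copy of the parse function from the check file, unchanged in behavior.
def nvidia_smi_parse(info):
    data = {}
    for i, line in enumerate(info):
        if len(line) != 4:
            continue  # Skip unexpected lines
        pool_name, pm_type, metric, value = line
        item = '%s [%s]' % (pool_name, pm_type)
        if item not in data:
            data[item] = {}
        data[item][metric] = int(value)
    return data


# Hypothetical rows with exactly four fields: these are parsed.
four_fields = [
    ["gpu0", "typeA", "temp", "41"],
    ["gpu0", "typeA", "fan", "30"],
]

# Hypothetical rows with any other field count: these are all skipped.
other_shape = [
    ["0", "SomeGPU", "41"],
    ["<xml>"],
]

parsed_ok = nvidia_smi_parse(four_fields)
parsed_empty = nvidia_smi_parse(other_shape)

print(parsed_ok)
print(parsed_empty)
```

If the second case matches what the agent really sends, the discovery function would get an empty dict, which would fit a run that finishes without errors but without any nvidia_smi service.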

Now the debug run:

OMD[SAL]:~/local/share/check_mk/checks$ cmk --debug -vvII salllgpuc01

Discovering services and host labels on: salllgpuc01

salllgpuc01:

+ FETCHING DATA

Source: SourceType.HOST/FetcherType.TCP

[cpu_tracking] Start [7f8f513fb550]

[TCPFetcher] Fetch with cache settings: DefaultAgentFileCache(base_path=PosixPath('/omd/sites/SAL/tmp/check_mk/cache/salllgpuc01'), max_age=MaxAge(checking=0, discovery=120, inventory=120), disabled=False, use_outdated=False, simulation=False)

Using data from cache file /omd/sites/SAL/tmp/check_mk/cache/salllgpuc01

Got 246023 bytes data from cache

[TCPFetcher] Use cached data

Closing TCP connection to 10.141.112.10:6556

[cpu_tracking] Stop [7f8f513fb550 - Snapshot(process=posix.times_result(user=0.0, system=0.0, children_user=0.0, children_system=0.0, elapsed=0.0))]

Source: SourceType.HOST/FetcherType.PIGGYBACK

[cpu_tracking] Start [7f8f513fb880]

[PiggybackFetcher] Fetch with cache settings: NoCache(base_path=PosixPath('/omd/sites/SAL/tmp/check_mk/data_source_cache/piggyback/salllgpuc01'), max_age=MaxAge(checking=0, discovery=120, inventory=120), disabled=False, use_outdated=False, simulation=False)

[PiggybackFetcher] Execute data source

No piggyback files for 'salllgpuc01'. Skip processing.

No piggyback files for '10.141.112.10'. Skip processing.

[cpu_tracking] Stop [7f8f513fb880 - Snapshot(process=posix.times_result(user=0.0, system=0.0, children_user=0.0, children_system=0.0, elapsed=0.0))]

+ PARSE FETCHER RESULTS

Source: SourceType.HOST/FetcherType.TCP

Loading autochecks from /omd/sites/SAL/var/check_mk/autochecks/salllgpuc01.mk

Trying to acquire lock on /omd/sites/SAL/var/check_mk/persisted/salllgpuc01

Got lock on /omd/sites/SAL/var/check_mk/persisted/salllgpuc01

Releasing lock on /omd/sites/SAL/var/check_mk/persisted/salllgpuc01

Released lock on /omd/sites/SAL/var/check_mk/persisted/salllgpuc01

Stored persisted sections: lnx_packages, lnx_distro, lnx_cpuinfo, dmidecode, lnx_uname, lnx_video, lnx_ip_r, lnx_sysctl, lnx_block_devices

Using persisted section SectionName('lnx_packages')

Using persisted section SectionName('lnx_distro')

Using persisted section SectionName('lnx_cpuinfo')

Using persisted section SectionName('dmidecode')

Using persisted section SectionName('lnx_uname')

Using persisted section SectionName('lnx_video')

Using persisted section SectionName('lnx_ip_r')

Using persisted section SectionName('lnx_sysctl')

Using persisted section SectionName('lnx_block_devices')

-> Add sections: ['check_mk', 'chrony', 'cifsmounts', 'cpu', 'df', 'diskstat', 'dmidecode', 'ipmi', 'ipmi_discrete', 'job', 'kernel', 'lnx_block_devices', 'lnx_cpuinfo', 'lnx_distro', 'lnx_if', 'lnx_ip_r', 'lnx_packages', 'lnx_sysctl', 'lnx_thermal', 'lnx_uname', 'lnx_video', 'local', 'md', 'mem', 'mounts', 'nfsmounts', 'nvidia_smi', 'postfix_mailq', 'postfix_mailq_status', 'ps_lnx', 'systemd_units', 'tcp_conn_stats', 'uptime', 'vbox_guest']

Source: SourceType.HOST/FetcherType.PIGGYBACK

No persisted sections loaded

-> Add sections: []

Received no piggyback data

+ EXECUTING HOST LABEL DISCOVERY

Trying host label discovery with: check_mk, chrony, cifsmounts, cpu, df, diskstat, dmidecode, ipmi, ipmi_discrete, job, kernel, lnx_block_devices, lnx_cpuinfo, lnx_distro, lnx_if, lnx_ip_r, lnx_packages, lnx_sysctl, lnx_thermal, lnx_uname, lnx_video, local, md, mem, mounts, nfsmounts, nvidia_smi, postfix_mailq, postfix_mailq_status, ps_lnx, systemd_units, tcp_conn_stats, uptime, vbox_guest

cmk/os_family: linux (check_mk)

+ PERFORM HOST LABEL DISCOVERY

Trying to acquire lock on /omd/sites/SAL/var/check_mk/discovered_host_labels/salllgpuc01.mk

Got lock on /omd/sites/SAL/var/check_mk/discovered_host_labels/salllgpuc01.mk

Releasing lock on /omd/sites/SAL/var/check_mk/discovered_host_labels/salllgpuc01.mk

Released lock on /omd/sites/SAL/var/check_mk/discovered_host_labels/salllgpuc01.mk

+ EXECUTING DISCOVERY PLUGINS (34)

Trying discovery with: ps, domino_tasks, lnx_if, check_mk_only_from, cpu_threads, chrony, job, mem_win, mounts, kernel_performance, k8s_stats_network, uptime, diskstat, cifsmounts, kernel_util, local, systemd_units_services_summary, ipmi, systemd_units_services, nvidia_smi, mem_vmalloc, kernel, lnx_thermal, df, tcp_conn_stats, docker_container_status_uptime, vbox_guest, postfix_mailq, postfix_mailq_status, check_mk_agent_update, mem_linux, md, nfsmounts, cpu_loads

Trying to acquire lock on /omd/sites/SAL/var/check_mk/autochecks/salllgpuc01.mk

Got lock on /omd/sites/SAL/var/check_mk/autochecks/salllgpuc01.mk

Releasing lock on /omd/sites/SAL/var/check_mk/autochecks/salllgpuc01.mk

Released lock on /omd/sites/SAL/var/check_mk/autochecks/salllgpuc01.mk

1 check_mk_agent_update

1 chrony

1 cpu_loads

1 cpu_threads

3 df

1 diskstat

1 ipmi

1 kernel_performance

1 kernel_util

4 lnx_if

2 lnx_thermal

1 mem_linux

4 mounts

125 nfsmounts

1 postfix_mailq

1 postfix_mailq_status

1 systemd_units_services_summary

1 tcp_conn_stats

1 uptime

SUCCESS - Found 152 services, 1 host labels

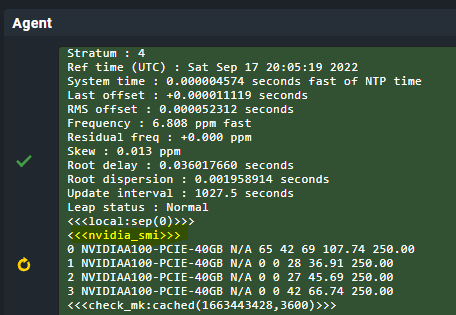

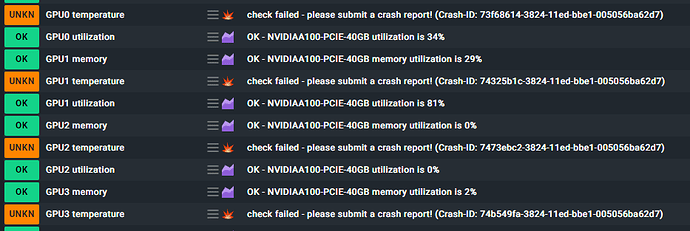

I can see nvidia_smi, but that's it: no service is discovered for it. This is after running cmk -O and cmk -II salllgpuc01.
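To see what the agent actually delivers in the nvidia_smi section, the field count per line could be checked directly. The following is only a sketch: it assumes the usual `<<<name>>>` section headers in the agent output and plain whitespace splitting (a separator option in the header is ignored here). The helper name `section_lines` and the sample text are hypothetical.

```python
import re


def section_lines(agent_output, section):
    """Return the whitespace-split lines of one section of agent output."""
    rows = []
    in_section = False
    for raw in agent_output.splitlines():
        # A header looks like <<<name>>> or <<<name:option>>>.
        header = re.match(r"<<<([^:>]*)(:[^>]*)?>>>", raw)
        if header:
            in_section = header.group(1) == section
            continue
        if in_section and raw.strip():
            rows.append(raw.split())
    return rows


# Made-up agent output, only to show the mechanics.
sample = """<<<check_mk>>>
Version: x
<<<nvidia_smi>>>
a b c d
only three fields
<<<uptime>>>
12345 67890
"""

rows = section_lines(sample, "nvidia_smi")
field_counts = [len(r) for r in rows]
print(field_counts)
```

For the real data, `sample` could be replaced by the contents of the cache file named in the debug output (/omd/sites/SAL/tmp/check_mk/cache/salllgpuc01), assuming that file holds the raw agent output. Any line whose count is not 4 would be dropped by the parse function.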