I am a complete beginner and want to create an SNMP check using a custom MIB. I am struggling a lot with it.

I have a device called Tintri VMstore that I want to monitor.

I have the vendor MIB, which somewhat works… but I’m not able to do an automatic scan using that MIB.

I placed the MIB under /omd/sites/Tintri/var/check_mk/snmpwalks/Tintri-MIB.txt and in some other folders.

From what I have read, I have to create a custom SNMP plugin, but as a beginner I am having trouble with that.

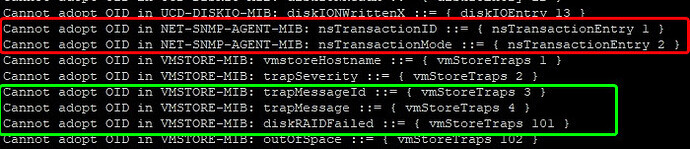

When I run an snmpwalk from the CLI as the root user, it can see the VMstore MIB, but it reports some issues.

Last output from snmpwalk under root is:

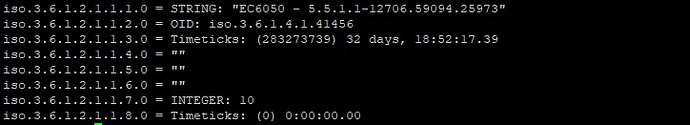

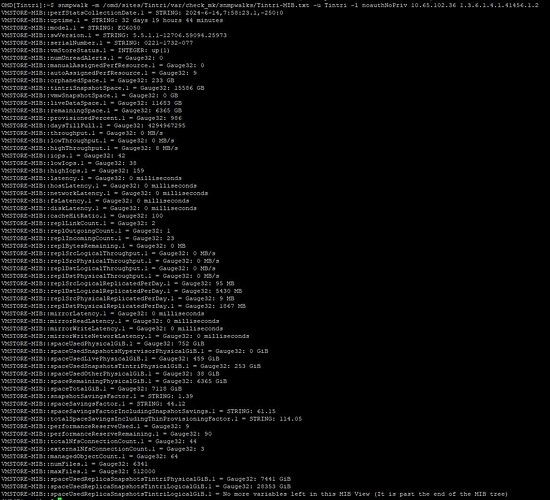

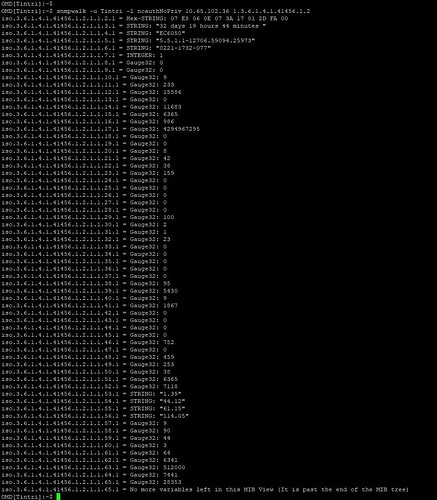

If I run the snmpwalk as the site user and specify a specific OID or the “global” OID to scan, I get good output with all the MIB contents.

When specifying the MIB:

Without specifying the MIB:

I have found some sample SNMP plugins and modified them, but I am not sure how to actually use them or whether they are correct.

These are the SNMP plugins I created from the samples I found. They are located at

local/lib/check_mk/base/plugins/agent_based/

```python
# SNMP OIDs:
# "1.3.6.1.4.1.41456.1.2.1" - vmstoreHostname
# "1.3.6.1.4.1.41456.1.2.2.1.46" - spaceUsedPhysicalGiB
# "1.3.6.1.4.1.41456.1.2.2.1.47" - spaceUsedSnapshotsHypervisorPhysicalGiB
# "1.3.6.1.4.1.41456.1.2.2.1.48" - spaceUsedLivePhysicalGiB
# "1.3.6.1.4.1.41456.1.2.2.1.49" - spaceUsedSnapshotsTintriPhysicalGiB
# "1.3.6.1.4.1.41456.1.2.2.1.50" - spaceUsedOtherPhysicalGiB
# "1.3.6.1.4.1.41456.1.2.2.1.51" - spaceRemainingPhysicalGiB
# "1.3.6.1.4.1.41456.1.2.2.1.52" - spaceTotalGiB
# "1.3.6.1.4.1.41456.1.2.2.1.53" - snapshotSavingsFactor
# "1.3.6.1.4.1.41456.1.2.2.1.54" - spaceSavingsFactor
# "1.3.6.1.4.1.41456.1.2.2.1.55" - spaceSavingsFactorIncludingSnapshotSavings
# "1.3.6.1.4.1.41456.1.2.2.1.56" - totalSpaceSavingsIncludingThinProvisioningFactor
# "1.3.6.1.4.1.41456.1.2.2.1.57" - performanceReserveUsed
# "1.3.6.1.4.1.41456.1.2.2.1.58" - performanceReserveRemaining
# "1.3.6.1.4.1.41456.1.2.2.1.18" - throughput
# "1.3.6.1.4.1.41456.1.2.2.1.19" - lowThroughput
# "1.3.6.1.4.1.41456.1.2.2.1.20" - highThroughput
# "1.3.6.1.4.1.41456.1.2.2.1.21" - iops
# "1.3.6.1.4.1.41456.1.2.2.1.22" - lowIops
# "1.3.6.1.4.1.41456.1.2.2.1.23" - highIops
# "1.3.6.1.4.1.41456.1.2.2.1.24" - latency
# "1.3.6.1.4.1.41456.1.2.2.1.25" - hostLatency
# "1.3.6.1.4.1.41456.1.2.2.1.26" - networkLatency
# "1.3.6.1.4.1.41456.1.2.2.1.27" - fsLatency
# "1.3.6.1.4.1.41456.1.2.2.1.28" - diskLatency
# "1.3.6.1.4.1.41456.1.2.2.1.29" - cacheHitRatio
# "1.3.6.1.4.1.41456.1.2.2.1.30" - replLinkCount
# "1.3.6.1.4.1.41456.1.2.2.1.31" - replOutgoingCount
# "1.3.6.1.4.1.41456.1.2.2.1.32" - replIncomingCount
# "1.3.6.1.4.1.41456.1.2.2.1.33" - replBytesRemaining
# "1.3.6.1.4.1.41456.1.2.2.1.34" - replSrcLogicalThroughput
# "1.3.6.1.4.1.41456.1.2.2.1.35" - replSrcPhysicalThroughput
# "1.3.6.1.4.1.41456.1.2.2.1.36" - replDstLogicalThroughput
# "1.3.6.1.4.1.41456.1.2.2.1.37" - replDstPhysicalThroughput
# "1.3.6.1.4.1.41456.1.2.2.1.38" - replSrcLogicalReplicatedPerDay
# "1.3.6.1.4.1.41456.1.2.2.1.39" - replDstLogicalReplicatedPerDay
# "1.3.6.1.4.1.41456.1.2.2.1.40" - replSrcPhysicalReplicatedPerDay
# "1.3.6.1.4.1.41456.1.2.2.1.41" - replDstPhysicalReplicatedPerDay
# "1.3.6.1.4.1.41456.1.2.2.1.42" - mirrorLatency
# "1.3.6.1.4.1.41456.1.2.2.1.43" - mirrorReadLatency
# "1.3.6.1.4.1.41456.1.2.2.1.44" - mirrorWriteLatency
# "1.3.6.1.4.1.41456.1.2.2.1.45" - mirrorWriteNetworkLatency
# "1.3.6.1.4.1.41456.1.2.2.1.17" - daysTillFull
# "1.3.6.1.4.1.41456.1.2.2.1.11" - orphanedSpace
# "1.3.6.1.4.1.41456.1.2.2.1.5" - swVersion
# "1.3.6.1.4.1.41456.1.2.2.1.4" - model
# "1.3.6.1.4.1.41456.1.2.2.1.3" - uptime
# "1.3.6.1.4.1.41456.1.2.2.1.6" - serialNumber
# "1.3.6.1.4.1.41456.1.2.2.1.7" - vmStoreStatus
# "1.3.6.1.4.1.41456.1.2.2.1.59" - totalNfsConnectionCount
# "1.3.6.1.4.1.41456.1.2.2.1.61" - managedObjectCount
# "1.3.6.1.4.1.41456.1.2.2.1.62" - numFiles
# "1.3.6.1.4.1.41456.1.2.2.1.63" - maxFiles

factory_settings["tintri_vmstore_levels"] = {
    # Define your levels here
}


def inventory_tintri_vmstore(info):
    inv = []
    # Inventory logic goes here
    for line in info:
        # Parse the line and append the inventory item
        inv.append((line[0], None))
    return inv


def check_tintri_vmstore(item, params, info):
    state_table = {
        # Define your state table here
    }
    for line in info:
        # Parse the line and extract relevant information
        if line[0] == item:
            # Perform calculations and checks
            # Placeholder state (0=OK, 1=WARN, 2=CRIT, 3=UNKNOWN); the sample
            # returned params[status], but `status` was never defined.
            status = 0
            # Return the status and the output message
            return status, "[Output message]"


check_info["tintri_vmstore"] = {
    "check_function": check_tintri_vmstore,
    "group": "tintri_vmstore",
    "inventory_function": inventory_tintri_vmstore,
    "service_description": "Tintri VMstore %s",
    "default_levels_variable": "tintri_vmstore_levels",
    "snmp_scan_function": lambda oid: '1.3.6.1.4.1.41456' in oid(".1.3.6.1.2.1.1.2.0"),
    "snmp_info": (".1.3.6.1.4.1.41456.1.2", [
        "1",  # vmstoreHostname
        "2.1.46",  # spaceUsedPhysicalGiB
        "2.1.47",  # spaceUsedSnapshotsHypervisorPhysicalGiB
        "2.1.48",  # spaceUsedLivePhysicalGiB
        "2.1.49",  # spaceUsedSnapshotsTintriPhysicalGiB
        "2.1.50",  # spaceUsedOtherPhysicalGiB
        "2.1.51",  # spaceRemainingPhysicalGiB
        "2.1.52",  # spaceTotalGiB
        "2.1.53",  # snapshotSavingsFactor
        "2.1.54",  # spaceSavingsFactor
        "2.1.55",  # spaceSavingsFactorIncludingSnapshotSavings
        "2.1.56",  # totalSpaceSavingsIncludingThinProvisioningFactor
        "2.1.57",  # performanceReserveUsed
        "2.1.58",  # performanceReserveRemaining
        "2.1.18",  # throughput
        "2.1.19",  # lowThroughput
        "2.1.20",  # highThroughput
        "2.1.21",  # iops
        "2.1.22",  # lowIops
        "2.1.23",  # highIops
        "2.1.24",  # latency
        "2.1.25",  # hostLatency
        "2.1.26",  # networkLatency
        "2.1.27",  # fsLatency
        "2.1.28",  # diskLatency
        "2.1.29",  # cacheHitRatio
        "2.1.30",  # replLinkCount
        "2.1.31",  # replOutgoingCount
        "2.1.32",  # replIncomingCount
        "2.1.33",  # replBytesRemaining
        "2.1.34",  # replSrcLogicalThroughput
        "2.1.35",  # replSrcPhysicalThroughput
        "2.1.36",  # replDstLogicalThroughput
        "2.1.37",  # replDstPhysicalThroughput
        "2.1.38",  # replSrcLogicalReplicatedPerDay
        "2.1.39",  # replDstLogicalReplicatedPerDay
        "2.1.40",  # replSrcPhysicalReplicatedPerDay
        "2.1.41",  # replDstPhysicalReplicatedPerDay
        "2.1.42",  # mirrorLatency
        "2.1.43",  # mirrorReadLatency
        "2.1.44",  # mirrorWriteLatency
        "2.1.45",  # mirrorWriteNetworkLatency
        "2.1.17",  # daysTillFull
        "2.1.11",  # orphanedSpace
        "2.1.5",  # swVersion
        "2.1.4",  # model
        "2.1.3",  # uptime
        "2.1.6",  # serialNumber
        "2.1.7",  # vmStoreStatus
        "2.1.59",  # totalNfsConnectionCount
        "2.1.61",  # managedObjectCount
        "2.1.62",  # numFiles
        "2.1.63",  # maxFiles
        OID_END
    ]),
}
```
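To make clearer what the `snmp_scan_function` in this first plugin is meant to do, here is a small standalone sketch of the same idea, written without any Checkmk imports so it can be run on its own. It assumes that the device reports a sysObjectID below the enterprise subtree .1.3.6.1.4.1.41456, which I have not verified on the VMstore. The function name `looks_like_tintri` and the sample values are made up for illustration.

```python
# OID of sysObjectID and the enterprise prefix used in the plugin above.
SYS_OBJECT_ID = ".1.3.6.1.2.1.1.2.0"
TINTRI_ENTERPRISE = ".1.3.6.1.4.1.41456"


def looks_like_tintri(fetch_oid):
    """Return True when sysObjectID lies below the assumed Tintri subtree.

    `fetch_oid` is a hypothetical callable that maps an OID string to the
    value the device returned, or None if the OID does not exist.
    """
    value = fetch_oid(SYS_OBJECT_ID) or ""
    # Normalise the leading dot so both notations compare equal.
    value = "." + value.lstrip(".")
    # Compare whole OID components, so 41456 does not match 414567.
    return value == TINTRI_ENTERPRISE or value.startswith(TINTRI_ENTERPRISE + ".")


# Sample data, invented for illustration and not taken from a real device.
tintri_like = {SYS_OBJECT_ID: ".1.3.6.1.4.1.41456.1.1"}
other_device = {SYS_OBJECT_ID: ".1.3.6.1.4.1.8072.3.2.10"}

print(looks_like_tintri(tintri_like.get))   # True
print(looks_like_tintri(other_device.get))  # False
```

Unlike the plain `in` test in the lambda, this compares complete OID components, so a sysObjectID from a different enterprise number that merely contains the same digits would not match.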

And I have another one:

```python
#!/usr/bin/env python3

from cmk.base.plugins.agent_based.agent_based_api.v1 import exists, register, SNMPTree, Service, Result, State


def parse_tintri_vmstore(string_table):
    # Position in the fetched row -> (name, OID suffix kept for reference)
    mib = {
        1: ('vmstoreHostname'),
        2: ('spaceUsedPhysicalGiB', '1.46'),
        3: ('spaceUsedSnapshotsHypervisorPhysicalGiB', '1.47'),
        4: ('spaceUsedLivePhysicalGiB', '1.48'),
        5: ('spaceUsedSnapshotsTintriPhysicalGiB', '1.49'),
        6: ('spaceUsedOtherPhysicalGiB', '1.50'),
        7: ('spaceRemainingPhysicalGiB', '1.51'),
        8: ('spaceTotalGiB', '1.52'),
        9: ('snapshotSavingsFactor', '1.53'),
        10: ('spaceSavingsFactor', '1.54'),
        11: ('spaceSavingsFactorIncludingSnapshotSavings', '1.55'),
        12: ('totalSpaceSavingsIncludingThinProvisioningFactor', '1.56'),
        13: ('performanceReserveUsed', '1.57'),
        14: ('performanceReserveRemaining', '1.58'),
        15: ('throughput', '1.18'),
        16: ('lowThroughput', '1.19'),
        17: ('highThroughput', '1.20'),
        18: ('iops', '1.21'),
        19: ('lowIops', '1.22'),
        20: ('highIops', '1.23'),
        21: ('latency', '1.24'),
        22: ('hostLatency', '1.25'),
        23: ('networkLatency', '1.26'),
        24: ('fsLatency', '1.27'),
        25: ('diskLatency', '1.28'),
        26: ('cacheHitRatio', '1.29'),
        27: ('replLinkCount', '1.30'),
        28: ('replOutgoingCount', '1.31'),
        29: ('replIncomingCount', '1.32'),
        30: ('replBytesRemaining', '1.33'),
        31: ('replSrcLogicalThroughput', '1.34'),
        32: ('replSrcPhysicalThroughput', '1.35'),
        33: ('replDstLogicalThroughput', '1.36'),
        34: ('replDstPhysicalThroughput', '1.37'),
        35: ('replSrcLogicalReplicatedPerDay', '1.38'),
        36: ('replDstLogicalReplicatedPerDay', '1.39'),
        37: ('replSrcPhysicalReplicatedPerDay', '1.40'),
        38: ('replDstPhysicalReplicatedPerDay', '1.41'),
        39: ('mirrorLatency', '1.42'),
        40: ('mirrorReadLatency', '1.43'),
        41: ('mirrorWriteLatency', '1.44'),
        42: ('mirrorWriteNetworkLatency', '1.45'),
        43: ('daysTillFull', '1.17'),
        44: ('orphanedSpace', '1.11'),
        45: ('swVersion', '1.5'),
        46: ('model', '1.4'),
        47: ('uptime', '1.3'),
        48: ('serialNumber', '1.6'),
        49: ('vmStoreStatus', '1.7'),
        50: ('totalNfsConnectionCount', '1.59'),
        51: ('managedObjectCount', '1.61'),
        52: ('numFiles', '1.62'),
        53: ('maxFiles', '1.63'),
    }
    parsed = {}
    if not string_table:
        # Nothing was fetched from the device
        return parsed
    for index, value in enumerate(string_table[0], start=1):
        oid_info = mib.get(index, None)
        if oid_info:
            oid_name = oid_info[0] if isinstance(oid_info, tuple) else oid_info
            # Store the plain value; the sample appended the OID suffix to it
            parsed[oid_name] = value
    return parsed


def discover_tintri_vmstore(section):
    yield Service()


# No `item` argument: the service name has no %s and Service() has no item
def check_tintri_vmstore(params, section):
    for key, value in section.items():
        if value == '':
            value = 'No data provided'
        yield Result(state=State.OK, summary=f"{key}: {value}")


register.snmp_section(
    name='tintri_vmstore',
    detect=exists('.1.3.6.1.4.1.41456.1.2'),
    fetch=SNMPTree(
        base='.1.3.6.1.4.1.41456.1.2',
        oids=[
            '1',  # vmstoreHostname
            '2.1.46',  # spaceUsedPhysicalGiB
            '2.1.47',  # spaceUsedSnapshotsHypervisorPhysicalGiB
            '2.1.48',  # spaceUsedLivePhysicalGiB
            '2.1.49',  # spaceUsedSnapshotsTintriPhysicalGiB
            '2.1.50',  # spaceUsedOtherPhysicalGiB
            '2.1.51',  # spaceRemainingPhysicalGiB
            '2.1.52',  # spaceTotalGiB
            '2.1.53',  # snapshotSavingsFactor
            '2.1.54',  # spaceSavingsFactor
            '2.1.55',  # spaceSavingsFactorIncludingSnapshotSavings
            '2.1.56',  # totalSpaceSavingsIncludingThinProvisioningFactor
            '2.1.57',  # performanceReserveUsed
            '2.1.58',  # performanceReserveRemaining
            '2.1.18',  # throughput
            '2.1.19',  # lowThroughput
            '2.1.20',  # highThroughput
            '2.1.21',  # iops
            '2.1.22',  # lowIops
            '2.1.23',  # highIops
            '2.1.24',  # latency
            '2.1.25',  # hostLatency
            '2.1.26',  # networkLatency
            '2.1.27',  # fsLatency
            '2.1.28',  # diskLatency
            '2.1.29',  # cacheHitRatio
            '2.1.30',  # replLinkCount
            '2.1.31',  # replOutgoingCount
            '2.1.32',  # replIncomingCount
            '2.1.33',  # replBytesRemaining
            '2.1.34',  # replSrcLogicalThroughput
            '2.1.35',  # replSrcPhysicalThroughput
            '2.1.36',  # replDstLogicalThroughput
            '2.1.37',  # replDstPhysicalThroughput
            '2.1.38',  # replSrcLogicalReplicatedPerDay
            '2.1.39',  # replDstLogicalReplicatedPerDay
            '2.1.40',  # replSrcPhysicalReplicatedPerDay
            '2.1.41',  # replDstPhysicalReplicatedPerDay
            '2.1.42',  # mirrorLatency
            '2.1.43',  # mirrorReadLatency
            '2.1.44',  # mirrorWriteLatency
            '2.1.45',  # mirrorWriteNetworkLatency
            '2.1.17',  # daysTillFull
            '2.1.11',  # orphanedSpace
            '2.1.5',  # swVersion
            '2.1.4',  # model
            '2.1.3',  # uptime
            '2.1.6',  # serialNumber
            '2.1.7',  # vmStoreStatus
            '2.1.59',  # totalNfsConnectionCount
            '2.1.61',  # managedObjectCount
            '2.1.62',  # numFiles
            '2.1.63',  # maxFiles
        ],
    ),
    parse_function=parse_tintri_vmstore,
)

register.check_plugin(
    name='tintri_vmstore',
    service_name='Tintri VMstore',
    discovery_function=discover_tintri_vmstore,
    check_function=check_tintri_vmstore,
    check_default_parameters={}
)
```
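What I would like the second plugin to do eventually is something like the following rough sketch, which runs on its own without Checkmk. It assumes the fetched data arrives as a list of rows, each row holding one string per OID in the order they were requested. The helper names `parse_rows` and `space_state`, the 80/90 percent thresholds, and the sample numbers are invented for illustration and do not come from the device.

```python
# Column names in the same order as the OIDs would be fetched.
# Only three of the columns from the plugin are used to keep this short.
COLUMNS = ["spaceUsedPhysicalGiB", "spaceRemainingPhysicalGiB", "spaceTotalGiB"]


def parse_rows(string_table):
    """Turn the first row of a list-of-rows table into a name -> value dict."""
    if not string_table:
        return {}
    return dict(zip(COLUMNS, string_table[0]))


def space_state(section, warn_pct=80.0, crit_pct=90.0):
    """Return (state, summary); state is 0 OK, 1 WARN, 2 CRIT or 3 UNKNOWN.

    The thresholds are hypothetical defaults, not vendor recommendations.
    """
    try:
        used = float(section["spaceUsedPhysicalGiB"])
        total = float(section["spaceTotalGiB"])
    except (KeyError, ValueError):
        return 3, "Space values missing or not numeric"
    if total <= 0:
        return 3, "Total space reported as zero"
    pct = 100.0 * used / total
    state = 2 if pct >= crit_pct else 1 if pct >= warn_pct else 0
    return state, f"Used: {pct:.1f}% ({used:.0f} of {total:.0f} GiB)"


# Sample row, invented for illustration.
sample_table = [["850", "150", "1000"]]
section = parse_rows(sample_table)
print(space_state(section))  # (1, 'Used: 85.0% (850 of 1000 GiB)')
```

In a real plugin the numeric state would presumably be mapped to `State.OK`, `State.WARN`, and so on, and returned through `Result`, but I have left that out here so the logic can be tested separately.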

CMK version: 2.2.0p23

OS version: CMA (1.5+) 64-bit

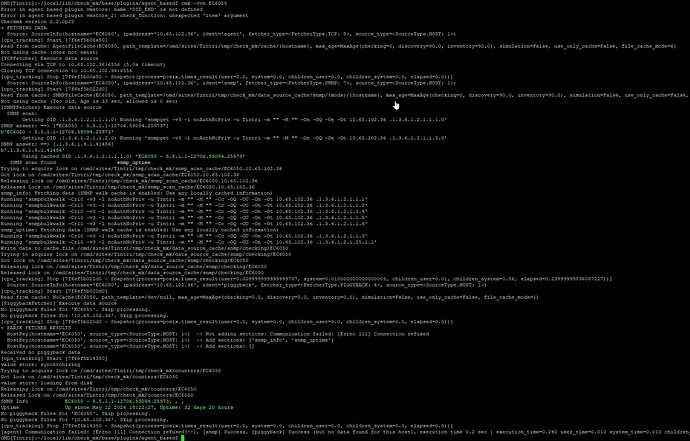

Output of “cmk --debug -vvn hostname”:

Thanks for your help, and please keep in mind that I am a complete beginner with CheckMK.